Automatic descriptive classification of self-reported traumatic experiences: A primer and example in applying a pretrained large language model to study psychological phenomenology.

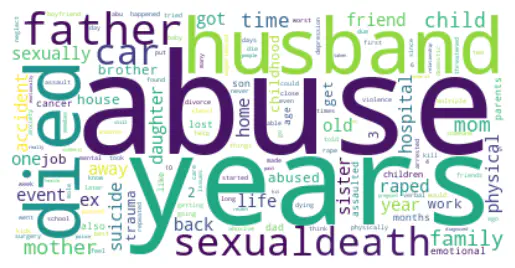

Example frequency of words across negative experience self-reports


This work draws on a large cohort (N = 1,473) recruited through Google Ads for pre-screening as part of a larger study on major depressive disorder (MDD). Written responses to an open-ended prompt about the worst event the respondent had experienced are descriptively classified using a novel 31-item inventory that allows for structured qualitative coding. The written responses are passed through a pretrained large language model (BERT) to automatically generate latent representations of these self-reported experiences. A dimensionally reduced form of these latent representations is then used as features to train, validate, and test a series of classification models which, taken together, summarize key themes of the reported negative experience. This pipeline is then used to explore the relative importance of different aspects of negative experiences (e.g., who was affected, who was responsible, when the experience occurred, what trauma was sustained, and whether the event was personally witnessed) by treating them as features in a new set of models tasked with predicting outcomes related to the PCL-5 (PTSD Checklist for DSM-5).
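As a rough illustration of the embed, reduce, and classify steps described above, the following minimal sketch runs the same sequence on synthetic data. Everything specific in it is an assumption made for illustration rather than the study's method: random 768-dimensional vectors stand in for BERT embeddings (768 is the hidden size of BERT-base), PCA stands in for the unspecified dimensionality reduction, a plain logistic regression stands in for the unspecified classifiers, the 60/20/20 split is arbitrary, and the single binary label is a hypothetical stand-in for one of the 31 inventory items.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for BERT embeddings of written responses (assumption:
# in practice these vectors would come from a pretrained BERT model).
n_responses, embed_dim = 600, 768
labels = rng.integers(0, 2, size=n_responses)   # hypothetical binary inventory item
signal = rng.normal(size=embed_dim)             # direction separating the two classes
embeddings = rng.normal(size=(n_responses, embed_dim)) + np.outer(labels - 0.5, signal)

# Train / validation / test split (60/20/20 is an assumed ratio).
order = rng.permutation(n_responses)
train_idx, val_idx, test_idx = np.split(order, [360, 480])


def fit_reducer(x, n_components):
    """Fit PCA on x only; return mean, principal axes, and per-component scale."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    axes = vt[:n_components]
    scale = ((x - mean) @ axes.T).std(axis=0)
    return mean, axes, scale


def reduce(x, mean, axes, scale):
    """Project x onto the fitted axes and standardize each component."""
    return (x - mean) @ axes.T / scale


def fit_logistic(x, y, lr=0.1, epochs=500):
    """Logistic regression trained by batch gradient descent."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        w -= lr * x.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def accuracy(x, y, w, b):
    """Fraction of rows whose predicted class matches y."""
    return float(np.mean(((x @ w + b) > 0) == y))


# Use the validation split to choose the number of retained components.
best_val, best_k, best_fit = -1.0, None, None
for k in (2, 10, 50):
    reducer = fit_reducer(embeddings[train_idx], k)
    w, b = fit_logistic(reduce(embeddings[train_idx], *reducer), labels[train_idx])
    val_acc = accuracy(reduce(embeddings[val_idx], *reducer), labels[val_idx], w, b)
    if val_acc > best_val:
        best_val, best_k, best_fit = val_acc, k, (reducer, w, b)

# Report held-out performance once, using the selected configuration.
reducer, w, b = best_fit
test_acc = accuracy(reduce(embeddings[test_idx], *reducer), labels[test_idx], w, b)
print(f"components={best_k} validation={best_val:.2f} test={test_acc:.2f}")
```

The reducer is fit on the training split only and then applied unchanged to the validation and test splits, so no information from held-out responses leaks into the features. In a full version of this sketch, one such classifier would be fit per inventory item.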

The primary goal of this work is to provide a detailed example of how pretrained large language models can be leveraged to profile mental health and address questions in psychological science using real-world data. By highlighting and explaining the major decision points along the way, from data preprocessing to interpretation of results, this work may serve as a starting point for researchers who want to use large language models in their own work.